VMware Cloud Foundation (VCF) integrates compute, storage, and networking into a single virtualization platform, and in a VCF environment the NSX platform provides the software-defined networking layer. At times, removing NSX edges becomes necessary due to infrastructure changes, optimization efforts, or other reasons. To simplify this process, VMware has introduced the NSX Edge Removal Tool. In this blog post, we will explore how this tool can streamline the removal of NSX edges in a VCF environment while preserving dependencies.

Understanding the NSX Edge Removal Tool

The NSX Edge Removal Tool is a utility developed by VMware for removing NSX edges in a VCF environment while preserving critical dependencies. Let’s walk through the steps involved in using it.

Step 1: Preparing for NSX Edge Removal

Before utilizing the NSX Edge Removal Tool, it is crucial to thoroughly understand your VCF environment and identify all dependencies associated with the NSX edges you plan to remove. Review your network configuration, firewall rules, security policies, and any applications or services relying on the edges. This assessment will help you plan and execute the edge removal process more efficiently.
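As a rough illustration of this assessment, here is a minimal Python sketch that lists which gateways reference a given edge cluster. It assumes you have already exported the locale-services objects for your Tier-0 and Tier-1 gateways from the NSX Policy API, and that each object carries an `edge_cluster_path` field. The gateway IDs, the edge cluster IDs, and the function name are hypothetical and only there for illustration.

```python
from __future__ import annotations


def gateways_using_edge_cluster(
    locale_services_by_gateway: dict[str, list[dict]],
    edge_cluster_path: str,
) -> list[str]:
    """Return the IDs of gateways whose locale services point at the edge cluster."""
    matches = []
    for gateway_id, locale_services in locale_services_by_gateway.items():
        # A gateway depends on the cluster if any of its locale services references it.
        if any(ls.get("edge_cluster_path") == edge_cluster_path for ls in locale_services):
            matches.append(gateway_id)
    return sorted(matches)


# Hypothetical sample data standing in for exported locale-services objects.
EC_BASE = "/infra/sites/default/enforcement-points/default/edge-clusters/"
sample = {
    "t0-wld1": [{"id": "default", "edge_cluster_path": EC_BASE + "ec-01"}],
    "t1-app": [{"id": "default", "edge_cluster_path": EC_BASE + "ec-01"}],
    "t1-db": [{"id": "default"}],  # distributed-only gateway, no edge cluster
    "t1-other": [{"id": "default", "edge_cluster_path": EC_BASE + "ec-02"}],
}

print(gateways_using_edge_cluster(sample, EC_BASE + "ec-01"))
```

Any gateway that shows up in the result is a dependency worth reviewing before the edges behind it go away.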

Step 2: Installing and Configuring the NSX Edge Removal Tool

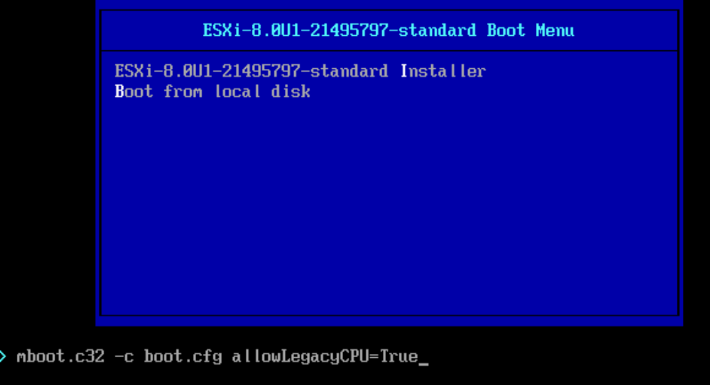

To begin, download the latest version of the NSX Edge Removal Tool from the VMware website. Follow the installation and configuration instructions provided by VMware to set the tool up in your VCF environment. Make sure you have the credentials and permissions needed to access and modify the NSX edges. In my case, I downloaded edge_cluster_cleaner_0.27.tar.gz and transferred it to the server.

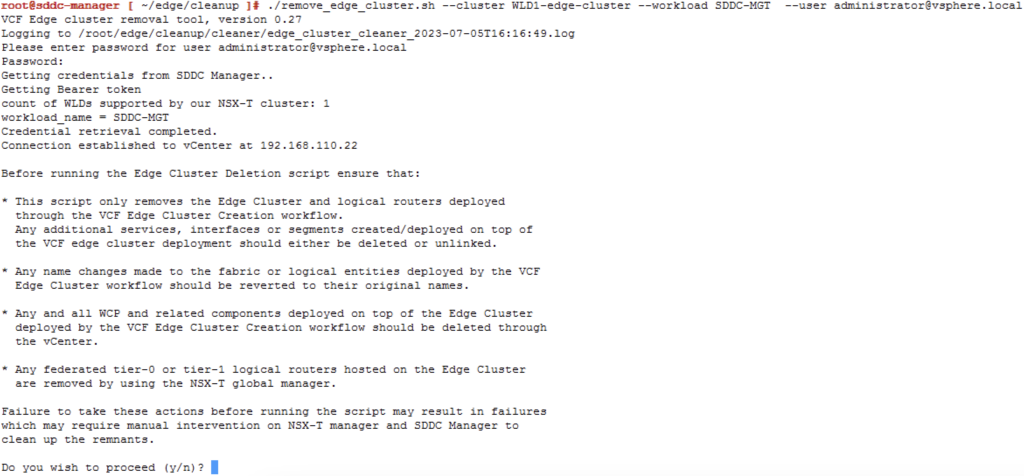
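Once the archive is on the server, it needs to be unpacked. The following is a small Python sketch of one way to do that, assuming the download is an ordinary gzip-compressed tarball as the .tar.gz extension suggests. The function name and the destination directory are my own choices, and I make no assumption here about what the archive contains.

```python
import tarfile
from pathlib import Path


def extract_archive(archive: Path, dest: Path) -> list[str]:
    """Extract a .tar.gz archive into dest and return the member names."""
    dest_root = dest.resolve()
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        for name in names:
            # Refuse members that would land outside the destination directory.
            target = (dest_root / name).resolve()
            if target != dest_root and dest_root not in target.parents:
                raise ValueError(f"unsafe path in archive: {name}")
        tar.extractall(dest_root)
    return names


# Example call (paths are illustrative):
#   extract_archive(Path("edge_cluster_cleaner_0.27.tar.gz"), Path("edge_cluster_cleaner"))
```

Printing the returned names is a quick way to confirm where the scripts ended up before running anything.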

Step 3: Running the NSX Edge Removal Tool

Once the tool is installed and configured, it’s time to execute the removal process. Launch the NSX Edge Removal Tool and provide the required information, such as the NSX Manager IP address, credentials, and the specific edges you wish to remove. The tool validates the environment and dependencies to help ensure a safe removal. In my case, the command took the edge cluster name, the workload domain, and the user, for example:

./remove_edge_cluster.sh --cluster WLD1-edge-cluster --workload SDDC-MGT --user [email protected]
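If you want to script the invocation, a minimal Python sketch could assemble the command line and print it for review before anything is executed. The `--cluster`, `--workload`, and `--user` flags are the ones from my run above. The helper name and the user value `sso-user@example.local` are placeholders I made up, so substitute your own account.

```python
import shlex


def build_remove_command(
    cluster: str,
    workload: str,
    user: str,
    script: str = "./remove_edge_cluster.sh",
) -> list[str]:
    """Build the argument list for the edge cluster removal script."""
    for label, value in (("cluster", cluster), ("workload", workload), ("user", user)):
        if not value:
            raise ValueError(f"{label} must not be empty")
    return [script, "--cluster", cluster, "--workload", workload, "--user", user]


# Placeholder user; the cluster and workload domain names are from my environment.
cmd = build_remove_command("WLD1-edge-cluster", "SDDC-MGT", "sso-user@example.local")
print(shlex.join(cmd))
```

After reviewing the printed command, the same list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains the script.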

Step 4: Verifying and Analyzing the Dependency Report

After executing the removal process, the NSX Edge Removal Tool generates a dependency report. This report provides crucial insights into the dependencies associated with the removed NSX edges. Review the report thoroughly to understand any potential impacts on your network infrastructure and applications.

Step 5: Addressing Dependencies and Network Adjustments

Based on the generated dependency report, it’s essential to address the identified dependencies and make necessary adjustments to your network configuration. Collaborate with network administrators, application owners, and other stakeholders to migrate the dependencies to alternative network resources. Update firewall rules, adjust routing configurations, and ensure seamless connectivity for critical services.

Step 6: Post-Removal Validation and Testing

After addressing the dependencies and making the required adjustments, perform comprehensive validation and testing to ensure that the network connectivity and critical services are functioning optimally. Monitor the network closely for any abnormalities or performance issues, and address them promptly.
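Part of this validation can be automated. Below is a minimal Python sketch that checks whether a list of critical services still accepts TCP connections after the removal. The service names, hosts, and ports in the usage comment are hypothetical, and a successful TCP connection only shows that the port is reachable, not that the application behind it is healthy.

```python
from __future__ import annotations

import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_services(services: dict[str, tuple[str, int]]) -> dict[str, bool]:
    """Map each service name to whether its endpoint is reachable."""
    return {name: tcp_reachable(host, port) for name, (host, port) in services.items()}


# Example call (hypothetical endpoints):
#   check_services({"web": ("app01.example.local", 443), "db": ("db01.example.local", 5432)})
```

Running the same check before and after the removal gives you a simple baseline to compare against.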

Conclusion

The NSX Edge Removal Tool provides a streamlined approach to removing NSX edges in a VMware Cloud Foundation (VCF) environment while preserving critical dependencies. By following the steps outlined in this blog post, you can simplify the edge removal process and keep your VCF environment running smoothly.