Like any operating system, Windows needs regular patching to stay protected against security threats. However, patching a large number of Windows systems by hand is tedious and time-consuming. This is where SaltStack comes in.

SaltStack is a popular open-source configuration management and orchestration tool that can be used to manage Windows systems, including patch management. In this blog, we will discuss how to use SaltStack to patch Windows systems.

Installing the Salt Minion on Windows

Before you can use SaltStack to manage Windows systems, you need to install the Salt Minion on each Windows system you want to manage. The Salt Minion is a lightweight agent that lets the Salt Master communicate with the Windows system and execute commands on it.

To install the Salt Minion on Windows, follow these steps:

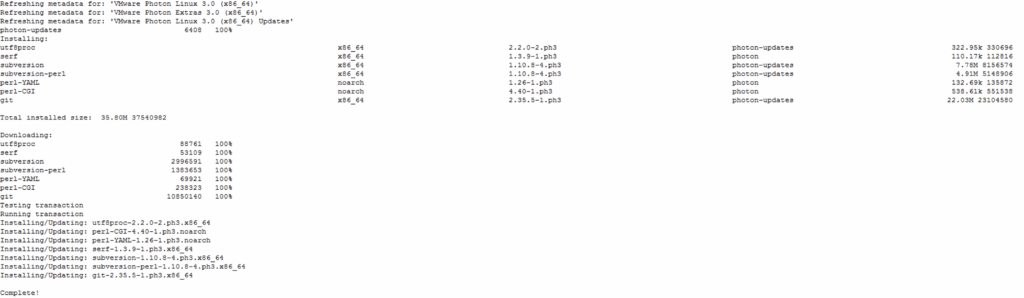

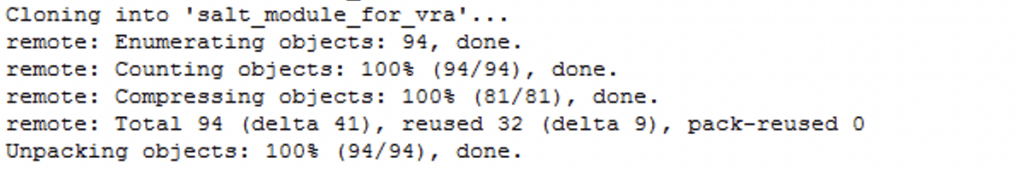

- Download the Salt Minion MSI package from the SaltStack website.

- Double-click the MSI package to start the installation process.

- Follow the on-screen instructions to complete the installation.

Once the installation is complete, the Salt Minion service runs on the Windows system. It can receive commands as soon as it is pointed at your Salt Master and its key has been accepted there.
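Before a new minion can be patched, the Salt Master has to accept its key. A minimal sketch of that step, run on the master, might look like the following. The minion ID `windows-server-01` is the example ID used in this post, and the `run` helper with its `DRY_RUN` switch is a convenience invented for this sketch, not part of Salt.

```shell
#!/bin/sh
# Assumption: this runs on the Salt Master.
# MINION_ID is the example minion ID from this post; change it to match yours.
MINION_ID="${MINION_ID:-windows-server-01}"

# Hypothetical helper: print each command by default,
# and only execute it when DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run salt-key -L                    # list accepted, pending and rejected keys
run salt-key -a "$MINION_ID" -y    # accept the new minion's key without prompting
run salt "$MINION_ID" test.ping    # confirm the minion answers
```

Run as-is, the script only prints the three commands. Running it with `DRY_RUN=0` executes them against a real master.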

Using the Salt Command to Install Updates

Once the Salt Minion is installed on a Windows system, you can use the salt command on the Salt Master to install updates. The salt command runs execution modules on the minions you target. In current Salt releases, Windows Update is handled by the built-in win_wua module, which replaces the older win_update module.
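It is usually worth checking what is pending before installing anything. The sketch below assumes a Salt release that ships the `win_wua` execution module (check this against your installed version) and uses the example minion ID `windows-server-01`. The `run` helper and `DRY_RUN` switch are invented for this sketch.

```shell
#!/bin/sh
# Assumption: this runs on the Salt Master and the minion uses the win_wua module.
TARGET="${TARGET:-windows-server-01}"

# Hypothetical helper: print each command by default,
# and only execute it when DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run salt "$TARGET" win_wua.list summary=True    # count pending updates per category
run salt "$TARGET" win_wua.list                 # show details of each pending update
run salt "$TARGET" win_wua.get_needs_reboot     # check whether a reboot is already pending
```

Listing first gives you a chance to spot large or reboot-heavy updates before they are applied.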

For example, the following command uses the win_wua module to install all available updates on the Windows system with the minion ID “windows-server-01”:

salt 'windows-server-01' win_wua.list install=True

Using the win_wua State Module

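SaltStack also provides a state module for Windows updates: win_wua, which is referenced in state files as wua. A state describes the desired condition of your Windows systems, including which updates must be installed, and Salt makes whatever changes are needed to reach it. You put these declarations in a state file, for example win_updates.sls, using functions such as wua.uptodate or wua.installed.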
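As an illustration, a state file for Windows updates could be created on the master roughly like this. This is a sketch under assumptions: `STATE_DIR` stands in for the master's file roots (commonly `/srv/salt`), the state ID `install_windows_updates` is a made-up label, and the category names should be checked against what your systems report.

```shell
#!/bin/sh
# Assumption: STATE_DIR stands in for the master's file_roots (commonly /srv/salt).
# It defaults to a local directory so the sketch can be tried safely.
STATE_DIR="${STATE_DIR:-./salt-states}"
mkdir -p "$STATE_DIR"

# Write a minimal state file. "install_windows_updates" is an arbitrary state ID.
# wua.uptodate installs every pending update that matches the filters below.
cat > "$STATE_DIR/win_updates.sls" <<'EOF'
install_windows_updates:
  wua.uptodate:
    - software: True
    - drivers: False
    - categories:
      - Critical Updates
      - Security Updates
EOF

echo "wrote $STATE_DIR/win_updates.sls"
```

Restricting the state to critical and security updates keeps the first rollout small. Dropping the `categories` filter would make the state cover all pending software updates.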

For example, assuming a state file named win_updates.sls exists in the Salt Master's file roots, the following command applies it to every Windows minion and installs the updates it declares:

salt -G 'os:Windows' state.apply win_updates

Using the winrepo Feature

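SaltStack’s winrepo feature is a software repository for Windows that works with the pkg module. It is a collection of package definition files that tell minions where to find an installer and how to run it silently. You can use it to distribute patched versions of third-party software, or standalone update packages that you host yourself, to all of your Windows systems.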
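To make this concrete, here is a rough sketch of adding a package definition for an in-house MSI. Everything specific in it is a placeholder: the package name `example-agent`, the version, the installer path, and `REPO_DIR`, which stands in for the master's winrepo directory (commonly `/srv/salt/win/repo-ng`).

```shell
#!/bin/sh
# Assumption: REPO_DIR stands in for the master's winrepo directory
# (commonly /srv/salt/win/repo-ng). It defaults to a local directory here.
REPO_DIR="${REPO_DIR:-./win-repo-ng}"
mkdir -p "$REPO_DIR"

# Hypothetical package definition: name, version and installer path are placeholders.
# full_name must match the name Windows shows for the installed program.
cat > "$REPO_DIR/example-agent.sls" <<'EOF'
example-agent:
  '1.2.3':
    full_name: 'Example Agent'
    installer: 'salt://win/repo-ng/example-agent/example-agent-1.2.3.msi'
    install_flags: '/qn /norestart'
    uninstaller: 'salt://win/repo-ng/example-agent/example-agent-1.2.3.msi'
    uninstall_flags: '/qn /norestart'
    msiexec: True
    reboot: False
EOF

echo "wrote $REPO_DIR/example-agent.sls"
```

Once minions have refreshed their package database, a definition like this could be installed with `salt 'windows-server-01' pkg.install example-agent`.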

For example, the following commands fetch the latest package definitions on the Salt Master and then refresh the package database on all Windows minions:

salt-run winrepo.update_git_repos
salt -G 'os:Windows' pkg.refresh_db

Conclusion

In this blog, we discussed how to use SaltStack to patch Windows systems. SaltStack provides a powerful and flexible solution for Windows patch management, allowing you to manage updates for a large number of Windows systems in an efficient and automated manner.

Whether you are managing a few Windows systems or hundreds, SaltStack can take much of the manual work out of Windows patch management. Start with a single test minion, then roll the same commands and states out to the rest of your systems.